Tech Series

This article is the first in a technology series that I will be posting in the near future. I will be writing about AWS, Serverless, NodeJS, Security and a few other topics.

The idea behind this series is to demonstrate how CyberCube is making use of Serverless and AWS Services.

Hopefully, with this set of articles, the reader will have a clearer view of how those services can be utilized to design a Cloud Platform.

Using AWS SAM for end-to-end local development and testing

In this post, we’ll discuss how we can use AWS SAM to develop a serverless back-end and test it via a web front-end.

Being able to run your whole solution locally is a pretty big deal. It saves a lot of time because you don't need to deploy your Lambda functions, Layers and WebUI after every change. Instead of uploading your Lambdas and Layers to AWS and publishing your UI behind CloudFront, you just run SAM for your back-end and, most likely, npm for hosting your front-end.

By creating SAM templates, you can test and debug your Lambda functions and Layers while still accessing the other services that you depend on (Aurora, DynamoDB, S3, ECS, ES, etc.).

We use this here at CyberCube to cut down on deployments and to respond faster to new features, bug fixes, etc. Once the local instance is running smoothly, we can easily run a Jenkins job to deploy everything onto AWS (it takes only a few minutes).

By running everything locally, we can also achieve functional independence. That is, the person(s) coding the front-end can just pull the latest back-end code, build it, run it and test their changes right away.

Installing AWS SAM

First, we need to install the AWS SAM CLI tool.

The following will work on Linux (I use Debian 10), and I believe it’s fairly similar on macOS.

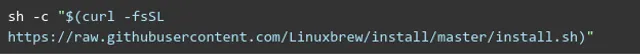

We’ll use Homebrew to install the CLI. SAM can also be installed via pip3, but in my case, I ended up picking Homebrew.

This command will download the latest Homebrew package and install it. You do not need to be root to use this.

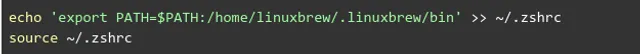

Now, in order to use it from anywhere, we can add it to the PATH. In my case, since I use zsh, I add the path to my .zshrc. If you use bash, you’d update .bashrc.

Once that is done, if you don’t want to close the terminal, you can simply reload the file in the current shell (with zsh, `source ~/.zshrc`).

Now we’re ready to install SAM.

Once SAM is installed, you can run `sam --version` and make sure that you have a version greater than or equal to 0.21.0. The reason for that version of SAM will be discussed shortly.
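If you want to automate that check (in a setup script, for instance), a minimal sketch in Node could look like the following. The function names are my own, and the sample strings only assume that the CLI prints a `major.minor.patch` number somewhere in its output.

```javascript
// Extract [major, minor, patch] from text such as the output of `sam --version`.
function parseVersion(text) {
  const match = /(\d+)\.(\d+)\.(\d+)/.exec(text);
  if (!match) throw new Error(`no version found in: ${text}`);
  return match.slice(1, 4).map(Number);
}

// True when the version found in `actual` is greater than or equal to `minimum`.
function isAtLeast(actual, minimum) {
  const a = parseVersion(actual);
  const m = parseVersion(minimum);
  for (let i = 0; i < 3; i += 1) {
    if (a[i] !== m[i]) return a[i] > m[i];
  }
  return true; // all three components are equal
}

// Sample inputs (assumed output format, shown for illustration only).
console.log(isAtLeast('SAM CLI, version 0.22.0', '0.21.0')); // true
console.log(isAtLeast('SAM CLI, version 0.19.1', '0.21.0')); // false
```

In a real script you would feed it the text returned by running `sam --version` through `child_process`.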

Dependencies

In order to run SAM, you will need to have Docker installed. I’m not going to talk about Docker installation because there are different ways to do so, depending on the Linux distro or even the OS.

Many guides describe how to do this; the official Docker documentation covers each platform.

Creating a template

You will need to create a template in order to build your Lambda functions.

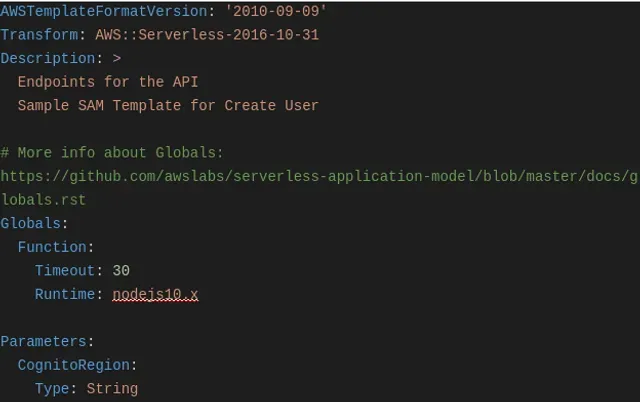

We will start with a simple template.yaml.

You can find the complete template.yaml here

In this template, we have defined the API and one function (GetInfo).

As you can see, the function refers to layers, which are more or less libraries (or modules in the case of NodeJS).

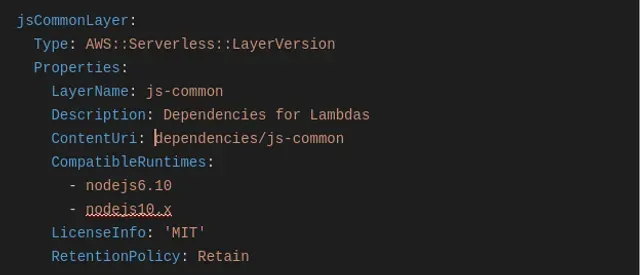

Layers are defined as follows:

Since layers are beyond the scope of this article, I won’t spend time on them.

Now that you have your template, you need an environment variable definition file.

Local Environment

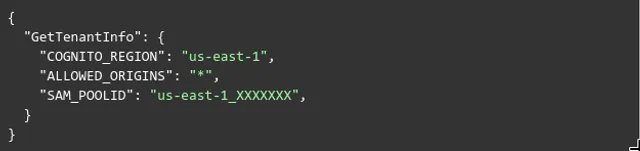

You will need to create a file that contains the environment variable definitions per function. I named mine local.env.json:
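For reference, the file passed to SAM via `--env-vars` is plain JSON with one top-level key per function logical ID, each mapping variable names to values. The sketch below builds such an object in Node and prints it. The variable names (`STAGE`, `DB_PORT`) are made up for illustration and are not taken from the actual project.

```javascript
// Build the object that SAM reads from the --env-vars file:
// { "<FunctionLogicalId>": { "<VARIABLE_NAME>": "<value>" } }
function buildEnvVars(perFunction) {
  const out = {};
  for (const [logicalId, vars] of Object.entries(perFunction)) {
    out[logicalId] = {};
    for (const [name, value] of Object.entries(vars)) {
      out[logicalId][name] = String(value); // environment variables are strings
    }
  }
  return out;
}

// Hypothetical variables for the GetInfo function defined in template.yaml.
const envVars = buildEnvVars({
  GetInfo: { STAGE: 'local', DB_PORT: 5432 },
});

const json = JSON.stringify(envVars, null, 2);
console.log(json);
// To save it: require('fs').writeFileSync('local.env.json', json + '\n');
```

The keys must match the logical IDs used in the template (here `GetInfo`), otherwise the values are not picked up by that function.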

Once this is done, you can build your API.

Building the API locally

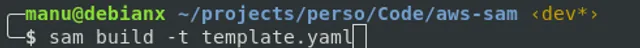

That is done very easily:

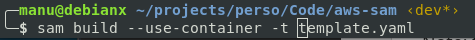

or

The first command has the advantage of building the API quite a bit faster.

Note that if you change the code of a function, you do not need to rebuild or restart the container.

All you need to specify is the name of your template file, which in my case is template.yaml.

Running the API

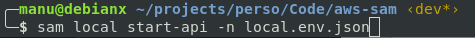

Again, this is very easy:

At this point, if you also have a Web UI running locally (via npm start, for example), you’ll need to point it at http://localhost:3000/

Port 3000 is the default port, but you can change it with the --port (-p) option on the command line.

CORS

Right now, we should have a Web UI up and running as well as the Serverless back-end.

Chances are that your UI and Lambda functions will be CORS-enabled. With the current template, the browser will throw an error because the preflight request to the endpoint you queried (in our case /common/getinfo) returned a 403.

If you look at the output from sam local start-api, you will see that it serves: http://localhost:3000/common/getinfo [GET] but there’s no OPTIONS method.

At that point, you can go online and search for SAM and CORS issues and spend hours looking for a solution, looking at threads from 2017…

This is a rather frustrating issue, which used to be solvable only by adding an extra Lambda function and a lot of extra configuration to the template.

Since SAM v0.21.0 (I mentioned the importance of the version earlier), the whole thing can be solved very easily.

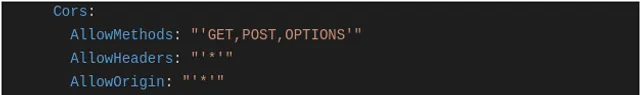

In your template, you’ll first need to add the following to the API definition:

Please refer to the template.yaml that is provided to see where the "Cors" element is added.

Note: If you use SAM 0.21.0, you don’t need the single quotes, but if you use 0.22.0 or later, those quotes are required.

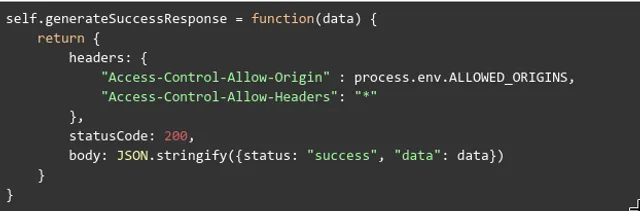

The remaining step is for your functions to return the appropriate headers. In my case, I have created a couple of helper functions in one of my layers; the main one returns whatever payload you give it, together with the proper CORS headers:

This function is located in the js-common layer (api_common.js).
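To give an idea of what such a helper can look like, here is a minimal sketch. It is not the actual content of api_common.js: the function names, the `ALLOWED_ORIGIN` environment variable and the header values are assumptions chosen for illustration.

```javascript
'use strict';

// Hypothetical setting: which origin may call the API. '*' is convenient for
// local work; in a deployed environment you would restrict it to your UI's origin.
const ALLOWED_ORIGIN = process.env.ALLOWED_ORIGIN || '*';

// Headers the browser expects to find on a cross-origin response.
function corsHeaders() {
  return {
    'Access-Control-Allow-Origin': ALLOWED_ORIGIN,
    'Access-Control-Allow-Headers': 'Content-Type,Authorization',
    'Access-Control-Allow-Methods': 'GET,OPTIONS',
  };
}

// Wrap any payload in an API Gateway proxy response that carries the CORS headers.
function corsResponse(payload, statusCode = 200) {
  return {
    statusCode,
    headers: { 'Content-Type': 'application/json', ...corsHeaders() },
    body: JSON.stringify(payload),
  };
}

// Example handler for a function such as GetInfo. In a Lambda module you
// would export it, e.g. `exports.handler = handler;`.
async function handler() {
  return corsResponse({ status: 'ok' });
}
```

Keeping the headers in one place means every function in the API returns the same CORS policy, and tightening it later is a one-line change in the layer.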

Once that is done, you can rebuild your API and run it.

If you look at the output from sam local start-api, you will now see that it serves: http://localhost:3000/common/getinfo [GET, OPTIONS].

When you make a call to that function, two things will happen:

- OPTIONS will return a 200

- GET will return a 200 (if successful, of course)
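If you prefer to verify this outside the browser, a small Node script can send the same two requests. This is only a sketch: `checkEndpoint` is a name I made up, and it assumes a Node version that ships a global `fetch` (18 or later) unless you pass your own implementation.

```javascript
// Send an OPTIONS request followed by a GET and report what came back.
// `fetchImpl` can be swapped out, which keeps the function easy to test.
async function checkEndpoint(url, fetchImpl = globalThis.fetch) {
  const preflight = await fetchImpl(url, { method: 'OPTIONS' });
  const get = await fetchImpl(url, { method: 'GET' });
  return {
    options: preflight.status,
    get: get.status,
    allowOrigin: get.headers.get('access-control-allow-origin'),
  };
}

// With `sam local start-api` running:
// checkEndpoint('http://localhost:3000/common/getinfo').then(console.log);
```

Both status codes should be 200, and `allowOrigin` should contain whatever origin your function returns in its CORS headers.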

Your WebUI and browser will be happy and you will now have a fully functional local serverless back-end and local WebUI working and talking to one another.
